Wikipedia and public service broadcasters have quite a similar mission. We both provide information for the public. One project is encyclopedic by its nature, the other journalistic. But both strive to document the world, as it was yesterday, as it is today and as it unfolds towards the future.

Since April 1st 2016 we at the Finnish Broadcasting Company, Yle, have tagged our online news and feature articles with concepts from Wikidata. This was a natural next step in a development that has been under way within Yle for several years. Still, it is an interesting path for a public service media company to choose.

By linking journalistic content and the wiki projects to each other in a machine-readable format, we hope to create win-win situations in which both of us can fulfill our missions even better.

Why Wikidata?

The short answer is: because Freebase was shutting down. We have tagged our articles using external vocabularies since 2012. The first one we used was the KOKO upper ontology from the Finnish thesaurus and ontology service Finto. KOKO consists of nearly 50 000 core concepts. It is very broad and of high semantic quality, but it doesn’t include terms for persons, organizations, events and places.

Enter Freebase. We originally chose Freebase in 2012 quite pragmatically over the competition (GeoNames, DBpedia and others), mainly because of its very well-functioning API. When Google announced in December 2014 that it would be shutting down Freebase, we had to tackle the situation in some way.

The options were either to use Freebase offline and start building our own taxonomy for new terms, or to find a replacement knowledge base. And then there was the wild card of the promised Google Knowledge Graph API. The main replacement candidates were DBpedia and Wikidata; no others could compete with Freebase in scope.

Some experts said that the structure and querying possibilities in Wikidata were subpar compared to DBpedia. But for tagging purposes, especially in the news departments, it was far more important that new tags could be added manually, either by ourselves or by the Wikipedians.

During 2015 there really seemed to be a lot of buzz going on around Wikidata and inside the community. And when we contacted Wikimedia Finland we were welcomed with open arms to the community, and that also made all the difference. (Thank you Susanna Ånäs!) So with the support of Wikimedia and with their assurance that Yle-content fulfilled the notability demand we went ahead with the project.

Migration

By October 2015 we had tagged articles with 28 000 different Freebase concepts. Of those, over 20 000 could be found directly in Wikidata through their Freebase URIs. For the remainder, after waiting some time for the promised migration support from Google, we started to map the concepts ourselves.

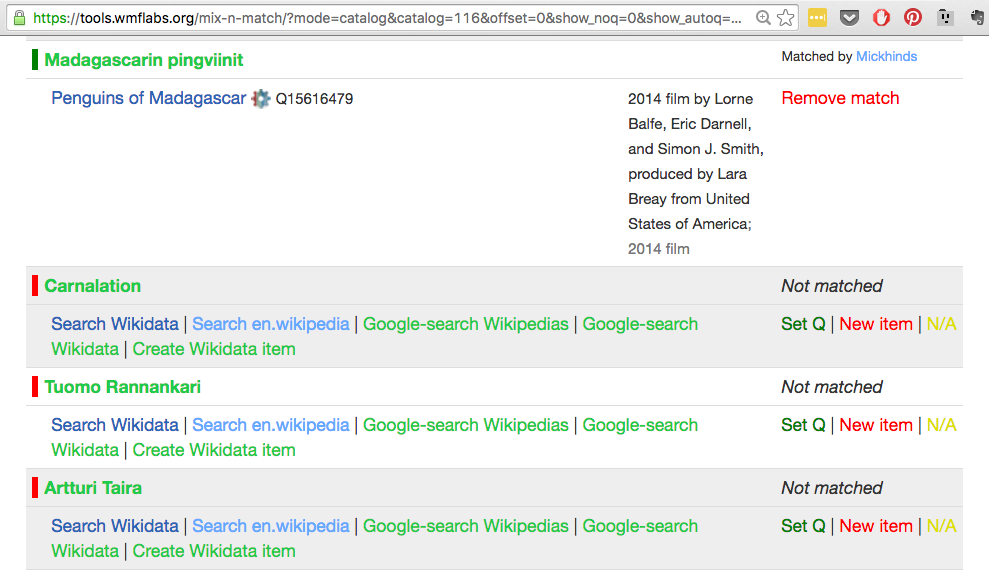

We got help from Wikidata to load them into the Mix’n’match tool (thank you Magnus Manske!) and mapped an additional 3800+ concepts. There are still 3558 terms unmapped at the time of writing, so please feel free to have a go at it. https://tools.wmflabs.org/mix-n-match/?mode=catalog&catalog=116#the_start

By the time of the transition to Wikidata in April 2016, our editors had been very diligent and had used 7000 more Freebase concepts. So the final mapping still covered only around 72% of the Freebase terms used. But as we are using several sources for tagging, this is an ongoing effort in any case, and not a problem for our information management.
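The mapping step can be pictured as a simple lookup. The sketch below assumes we already hold a table from Freebase IDs to Wikidata item IDs (Wikidata stores Freebase IDs in property P646). The function names are ours for illustration, and the identifiers are arbitrary placeholders rather than the concepts we actually mapped.

```python
from typing import Dict, Iterable, List, Tuple


def split_by_mapping(
    used_mids: Iterable[str],
    mid_to_qid: Dict[str, str],
) -> Tuple[Dict[str, str], List[str]]:
    """Split the Freebase IDs we have used into mapped and unmapped ones."""
    mapped: Dict[str, str] = {}
    unmapped: List[str] = []
    for mid in used_mids:
        qid = mid_to_qid.get(mid)
        if qid is not None:
            mapped[mid] = qid
        else:
            unmapped.append(mid)  # left over for manual matching
    return mapped, unmapped


def coverage(mapped_count: int, total_count: int) -> float:
    """Share of the used concepts that have a Wikidata counterpart."""
    return mapped_count / total_count if total_count else 0.0


# Placeholder data: arbitrary IDs, not meant to denote particular items.
used = ["/m/0aaa1", "/m/0aaa2", "/m/0aaa3", "/m/0aaa4"]
known = {"/m/0aaa1": "Q1000001", "/m/0aaa3": "Q1000003", "/m/0aaa4": "Q1000004"}

mapped, unmapped = split_by_mapping(used, known)
print(f"{coverage(len(mapped), len(used)):.0%} mapped, {len(unmapped)} left")
```

Whatever ends up in `unmapped` is the kind of list that would then be worked through by hand in a matching tool.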

Technical implementation

We integrated the Wikidata annotation service into our API architecture at Yle. Within it we have our “Meta-API”, which gathers all the tags used for describing Yle content. When the API is called, it returns results from Wikidata, KOKO and a third, commercial source vocabulary that we are using. Since those three vocabularies partially overlap (e.g. country names can be found in all three sources), they need to be mapped, or bridged, to each other. The vocabulary is maintained under the supervision of producer Pia Virtanen.
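How such bridging might work can be sketched in a few lines. This is only an illustration, assuming a hand-maintained table of equivalent concept identifiers. The source names, the identifier formats and the `merge_tags` function are hypothetical and do not describe the actual Meta-API.

```python
from typing import Dict, List

# Hypothetical bridge table: each vocabulary-specific ID points to one
# canonical ID, so overlapping concepts collapse into a single tag.
BRIDGE: Dict[str, str] = {
    "koko:finland": "wikidata:finland",
    "commercial:finland": "wikidata:finland",
}


def merge_tags(results_by_source: Dict[str, List[dict]]) -> List[dict]:
    """Merge tag hits from several vocabularies, folding bridged duplicates."""
    merged: Dict[str, dict] = {}
    for source, hits in results_by_source.items():
        for hit in hits:
            canonical = BRIDGE.get(hit["id"], hit["id"])
            entry = merged.setdefault(
                canonical,
                {"id": canonical, "label": hit["label"], "sources": []},
            )
            entry["sources"].append(source)  # remember where the hit came from
    return list(merged.values())


# Made-up search results for the query "Finland".
results = {
    "wikidata": [{"id": "wikidata:finland", "label": "Finland"}],
    "koko": [
        {"id": "koko:finland", "label": "Finland"},
        {"id": "koko:economy", "label": "economy"},
    ],
    "commercial": [{"id": "commercial:finland", "label": "Finland"}],
}
tags = merge_tags(results)
```

Here the three hits for Finland end up as one tag that records all three sources, while the concept found only in one vocabulary passes through unchanged.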

The API call to Wikidata returns only results that have a label in at least one of Finnish, Swedish or English. Wikidata still lacks descriptions in Swedish and Finnish for a large share of its terms, so we also fetch the first paragraph from Wikipedia to give disambiguation information to the editors who do the annotation.
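As a rough sketch of the label handling, the code below builds a request for the public Wikidata `wbgetentities` endpoint and picks a label with a language fallback. The helper names, the fallback order and the rule for when to fetch a Wikipedia introduction are our assumptions for illustration, not a description of the production code. The sample entity is trimmed by hand and its values are illustrative.

```python
from typing import Optional
from urllib.parse import urlencode

WIKIDATA_API = "https://www.wikidata.org/w/api.php"
LANGUAGES = ("fi", "sv", "en")  # the languages named in the text


def entity_request_url(qid: str) -> str:
    """Build a wbgetentities URL asking only for labels and descriptions."""
    params = {
        "action": "wbgetentities",
        "ids": qid,
        "props": "labels|descriptions",
        "languages": "|".join(LANGUAGES),
        "format": "json",
    }
    return f"{WIKIDATA_API}?{urlencode(params)}"


def pick_label(entity: dict, preferred: str) -> Optional[str]:
    """Return the label in the preferred language, else the first fallback."""
    labels = entity.get("labels", {})
    for lang in (preferred, *LANGUAGES):
        if lang in labels:
            return labels[lang]["value"]
    return None  # assumption: an item without any of these labels is skipped


def needs_wikipedia_intro(entity: dict, lang: str) -> bool:
    """True when the item has no description in the editor's language."""
    return lang not in entity.get("descriptions", {})


# One entity in the shape wbgetentities uses, trimmed by hand.
sample = {
    "labels": {
        "sv": {"language": "sv", "value": "Helsingfors"},
        "en": {"language": "en", "value": "Helsinki"},
    },
    "descriptions": {"en": {"language": "en", "value": "capital of Finland"}},
}

print(pick_label(sample, "fi"), needs_wikipedia_intro(sample, "fi"))
```

The network request itself is left out on purpose. The URL can be passed to any HTTP client, and the same pattern applies to fetching an article introduction from Wikipedia.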

The Meta-API is then implemented in the CMSs. For example, for the Drupal 7 sites widely used within Yle we have our own module, YILD https://www.drupal.org/project/yild, which can be used for tagging against any external source.

The article featured in the demo video above: http://svenska.yle.fi/artikel/2016/04/15/kerry-usa-hade-ratt-att-skjuta-ner-ryska-plan

The UX encourages a workflow where the editor first inserts the text and then manually chooses a few primary tags. After that, they can fetch automatic annotation suggestions at the push of a button, which returns approximately a dozen more tags. From these the editor can select the suitable ones.

The tags are then used in the article itself. They create automatic topical pages, serve as subject headings and navigation in curated topical pages, and bring together Yle content produced in different CMSs and organizational units in different languages. They are used in our news app Uutisvahti/Nyhetskollen. And the tags are also printed out in the source code according to schema.org specifications, mainly for SEO.
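To give an idea of what schema.org markup for tags can look like, here is a small sketch that renders tags as JSON-LD. The choice of JSON-LD, the `NewsArticle` type, the `about` and `sameAs` properties and the `article_jsonld` function are our assumptions for illustration. The markup actually printed on Yle pages may be structured differently, and the headline and URL below are placeholders.

```python
import json
from typing import List


def article_jsonld(headline: str, url: str, tags: List[dict]) -> str:
    """Render an article and its Wikidata tags as a schema.org JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "url": url,
        # Each tag becomes a Thing that points at its Wikidata entity URI.
        "about": [
            {
                "@type": "Thing",
                "name": tag["label"],
                "sameAs": f"http://www.wikidata.org/entity/{tag['qid']}",
            }
            for tag in tags
        ],
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


markup = article_jsonld(
    "Example headline",
    "https://example.org/article/1",
    [{"label": "United States", "qid": "Q30"}, {"label": "Finland", "qid": "Q33"}],
)
print(markup)
```

The resulting string would be placed in a `script` element of type `application/ld+json` in the page source.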

The opening of Yle’s APIs is on the road map for Yle’s internet development. Once we can provide this metadata together with data about our articles and programs, third-party developers can build new and specialized solutions for specific uses and audiences.

One suggested application would be to build a “Wikipedia source finder”, so that a Wikipedian who finds a stub article could look up what material Yle has about the topic and complete the article with Yle as a source.

Wikidata for annotation

At the time of writing we still have only a couple of weeks’ experience with annotating our content using Wikidata. Compared to Freebase, there seem to be far more local persons such as artists and politicians, as well as more local places and events.

For breaking news stories the wiki community is of great help. In a sense, the wiki community is crowdsourcing new tags. For example, events like the 2016 Brussels bombings or the Panama Papers leak get articles written very soon after the events have taken place, which creates a Wikidata item and thus a tag for us. This also gives the added benefit of a normalized label and description for the events.

In Norway several media companies, including our fellow broadcaster NRK, have started collaborating on how to name important news events in a unified and homogeneous way. During the tragic attacks in Norway in 2011, over 200 different labels for the event were created and used in the media during the first day.

Through Wikidata we can normalize this information for all the languages we use: Finnish, Swedish and English. And we have the option to expand to any of the dozens of languages active in the wiki projects.

Wikidata and public service

Apart from the technical factors, which all do their job, there is another side to using Wikidata as well. It feels as though the wiki projects and public service companies have a lot in common in their ethos and mission, but not so much that they end up in a competitive relationship.

The web is increasingly a place of misinformation and of commercial actors monetizing user data. Accordingly, it feels increasingly important to tie independent public service media to the free-access, free-content information structure, built by the public, that constitutes the wiki projects. As content becomes more and more platform agnostic and data driven, this strategy seems a good investment for the future of independent journalism.