The GLAMWiki Toolset project is a collaboration between various Wikimedia chapters and Europeana. The goal of the project is to provide easy-to-use tools for making batch uploads of GLAM (Galleries, Libraries, Archives & Museums) content to Wikimedia Commons. Wikimedia Finland invited the senior developer of the project, Dan Entous, to Helsinki to hold a GWToolset workshop for representatives of GLAMs and staff of Wikimedia Finland on 10th September. The workshop was the first of its kind outside the Netherlands.

GLAMWikiToolset training in Helsinki. Photo: Teemu Perhiö. CC-BY

I took part in the workshop as the tech assistant of Wikimedia Finland. Since the workshop I have been trying to figure out what is needed for using the toolset from a GLAM perspective. In this text I’m concentrating on the technical side of these requirements.

What is needed for GWToolset?

From a technical point of view, the use of GWToolset can be split into three sections. First there are things that must be done before using the toolset. GWToolset requires the metadata as an XML file that is structured in a certain way. The image files must also be addressable by direct URLs, and the domain name of the image server must be added to the upload whitelist in Commons.
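The URL requirement can be checked before any upload is attempted. The following is a minimal Python sketch of such a pre-flight check, not part of GWToolset itself. The element name `image_url`, the sample records and the contents of `WHITELISTED_DOMAINS` are all assumptions made for illustration.

```python
from urllib.parse import urlparse
import xml.etree.ElementTree as ET

# Hypothetical sample in the spirit of a flat metadata file; the element
# names are assumptions, not the schema GWToolset requires.
SAMPLE_XML = """<records>
  <record>
    <title>Blenda and Hugo Simberg</title>
    <image_url>http://images.example.org/simberg/0001.jpg</image_url>
  </record>
  <record>
    <title>Untitled negative</title>
    <image_url>http://other.example.net/view?id=2</image_url>
  </record>
</records>"""

# Hypothetical set of domains assumed to be on the Commons upload whitelist.
WHITELISTED_DOMAINS = frozenset({"images.example.org"})


def find_unlisted_urls(xml_text, url_tag="image_url", allowed=WHITELISTED_DOMAINS):
    """Return the image URLs whose host is not in the allowed set."""
    root = ET.fromstring(xml_text)
    problems = []
    for element in root.iter(url_tag):
        url = (element.text or "").strip()
        host = urlparse(url).hostname
        if host not in allowed:
            problems.append(url)
    return problems


if __name__ == "__main__":
    for url in find_unlisted_urls(SAMPLE_XML):
        print("not whitelisted:", url)
```

The check only compares host names. Whether a URL really points directly at an image file would still have to be verified separately.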

The second section concerns practices in Wikimedia Commons itself. This means getting to know the templates, such as the institution, photograph and artwork templates, as well as finding the categories that are suitable for the uploaded material. For someone who is not a Wikipedian, like myself, it takes a while to get to know the templates and especially the categories.

The third section is actually making the uploads with the toolset itself, which I find easy to use. It has a clear workflow, and with a little assistance there should be no problems for GLAMs using it. Besides, there is a sandbox called Commons Beta where one can rehearse before going public.

I believe that the bottleneck for GLAMs is the first section: things that must be done before using the toolset. More precisely, creating a valid XML file for the toolset. Of course, if an organisation has a competent IT department with resources to work on material donations to Wikimedia Commons, then there is no problem. However, this could be a problem for smaller, less well-resourced organisations.

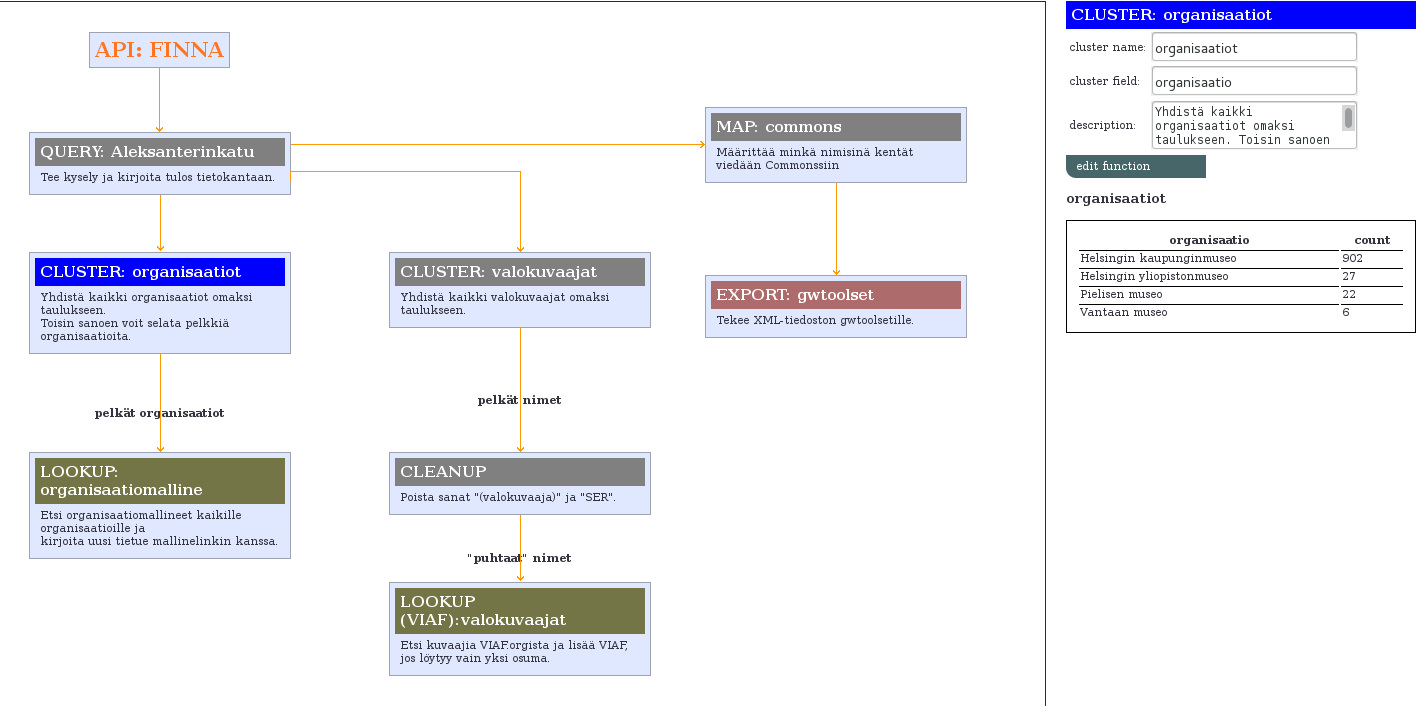

Converting metadata in practice

As I said, GWToolset requires an XML file with a certain structure. As far as I know, there is no information system that could directly produce such a file. However, most systems are able to export metadata in XML format. Even though the exported file is not valid for GWToolset, it can be converted into a valid one with XSLT.

XSLT is designed for this specific task and it has a very powerful template mechanism for XML handling. This means that the amount of code stays minimal compared to other options. The good news is that XML transformations are relatively easy to do.

XSLT is our friend when it comes to XML manipulation.

In order to learn what is needed for such transforms with real data, I made a couple of practical demos. I wanted to create a very lightweight solution for transforming the metadata sets for GWToolset. Modern web browsers are flexible application platforms, and web scraping, for example, can be done easily with JavaScript.

A browser-based solution has many advantages. The first is that every Internet user already has a browser. So there is no downloading, installing or configuring needed. The second advantage is that browser-based applications that use external datasets do not create traffic to the server where the application is hosted. Browsers can also be used locally. This allows organisations to download the page files, modify them, make conversions locally in-house, and have their materials on Wikimedia Commons.

XSLT of course requires a platform to run on. There is a JavaScript library called Saxon-CE that provides this platform for browsers. So, a web browser offers all that is needed for metadata conversions: web scraping, XML handling and conversions through XSLT, and user interface components. Of course, the XSLT files can also be run in any other XSLT environment, like xsltproc.

Demos

Blenda and Hugo Simberg, 1896. source: The National Gallery of Finland, CC BY 4.0

The first demo I created uses an open data image set published by the Finnish National Gallery. It consists of about one thousand digitised negatives of and by Finnish artist Hugo Simberg. The set also includes digitally created positives of images. The metadata is provided as a single XML file.

The conversion in this case is quite simple, since the original XML file is flat (i.e. there are no nested elements). Basically the original data is passed through as it is, with a few exceptions. The “image” element in the original metadata includes only an image id, which must be expanded to a full URL. I used a dummy domain name here, since the images are available only as a ZIP file and therefore cannot be addressed individually. Another exception is the “keeper” element, which holds the name of the owner organisation. This was changed from the Finnish name of the National Gallery to a name that corresponds to their institution template name in Wikimedia Commons.
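The demo does this with XSLT. Purely as an illustration of the same pass-through logic, here is a minimal Python sketch. Only the “image” and “keeper” element names come from the text above. The other element names, the sample values, the base URL, the `.jpg` suffix and the name mapping are assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical flat source record; element names other than "image" and
# "keeper", and all values, are made up for this sketch.
SOURCE_XML = """<records>
  <record>
    <title>Blenda and Hugo Simberg</title>
    <year>1896</year>
    <image>simberg_0001</image>
    <keeper>Kansallisgalleria</keeper>
  </record>
</records>"""

# Dummy base URL, in the same spirit as the dummy domain used in the demo.
IMAGE_BASE_URL = "http://images.example.org/simberg/"
# Assumed mapping from the source keeper name to a Commons institution name.
KEEPER_NAMES = {"Kansallisgalleria": "Finnish National Gallery"}


def convert(xml_text):
    """Pass every field through unchanged except "image" and "keeper"."""
    root = ET.fromstring(xml_text)
    for record in root.iter("record"):
        for field in record:
            value = (field.text or "").strip()
            if field.tag == "image":
                # Expand the bare image id to a full URL (file suffix assumed).
                field.text = IMAGE_BASE_URL + value + ".jpg"
            elif field.tag == "keeper":
                # Replace known keeper names, leave unknown ones untouched.
                field.text = KEEPER_NAMES.get(value, value)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(convert(SOURCE_XML))
```

In XSLT the equivalent is an identity template plus two small templates that override the output for the two special elements.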

example record:

http://opendimension.org/wikimedia/simberg/xml/simberg_sample.xml

source metadata:

http://www.lahteilla.fi/simberg-data/#/overview

conversion demo:

http://opendimension.org/wikimedia/simberg/

direct link to the XSLT:

http://opendimension.org/wikimedia/simberg/xsl/simberg_clean.xsl

Photo: Signe Brander. source: Helsinki City Museum, CC BY-ND 4.0

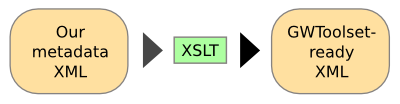

In the second demo I used the materials provided by the Helsinki City Museum. Their materials in Finna are licensed with CC-BY-ND 4.0. Finna is an “information search service that brings together the collections of Finnish archives, libraries and museums”. Currently there is no API to Finna. Finna provides metadata in LIDO format but there is no direct URL to the LIDO file. However, LIDO can be extracted from the HTML.

LIDO is a deeply nested format, so the conversion mostly consists of picking elements from the LIDO file and placing them in a flat XML file. For example, the name of the author is nested quite deeply in LIDO.
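To show what picking elements out of a deep structure looks like, here is a minimal Python sketch (the demo itself uses XSLT). The element paths follow my reading of the LIDO schema and should be checked against a real record. The sample values and the output element names `title` and `author` are assumptions.

```python
import xml.etree.ElementTree as ET

NS = {"lido": "http://www.lido-schema.org"}

# Heavily trimmed, hypothetical LIDO record with made-up values.
SAMPLE_LIDO = """<lido:lido xmlns:lido="http://www.lido-schema.org">
  <lido:descriptiveMetadata>
    <lido:objectIdentificationWrap>
      <lido:titleWrap>
        <lido:titleSet>
          <lido:appellationValue>Street view</lido:appellationValue>
        </lido:titleSet>
      </lido:titleWrap>
    </lido:objectIdentificationWrap>
    <lido:eventWrap>
      <lido:eventSet>
        <lido:event>
          <lido:eventActor>
            <lido:actorInRole>
              <lido:actor>
                <lido:nameActorSet>
                  <lido:appellationValue>Brander, Signe</lido:appellationValue>
                </lido:nameActorSet>
              </lido:actor>
            </lido:actorInRole>
          </lido:eventActor>
        </lido:event>
      </lido:eventSet>
    </lido:eventWrap>
  </lido:descriptiveMetadata>
</lido:lido>"""

# Flat output field name -> path of the nested LIDO element it is taken from.
FIELD_PATHS = {
    "title": "lido:descriptiveMetadata/lido:objectIdentificationWrap/"
             "lido:titleWrap/lido:titleSet/lido:appellationValue",
    "author": "lido:descriptiveMetadata/lido:eventWrap/lido:eventSet/"
              "lido:event/lido:eventActor/lido:actorInRole/lido:actor/"
              "lido:nameActorSet/lido:appellationValue",
}


def flatten(lido_text):
    """Build a flat <record> element from one nested LIDO record."""
    source = ET.fromstring(lido_text)
    record = ET.Element("record")
    for name, path in FIELD_PATHS.items():
        found = source.find(path, NS)
        if found is not None and found.text:
            ET.SubElement(record, name).text = found.text.strip()
    return record


if __name__ == "__main__":
    print(ET.tostring(flatten(SAMPLE_LIDO), encoding="unicode"))
```

Each flat field is simply the first match of one long path. Fields that are missing from a record are left out of the output rather than written as empty elements.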

example LIDO record:

http://opendimension.org/wikimedia/finna/xml/example_LIDO_record.xml

source metadata:

https://hkm.finna.fi/

conversion demo:

http://opendimension.org/wikimedia/finna/

(Please note that the demo requires that the same-origin policy restrictions are loosened in the browser. The simplest way to do this is to start Google Chrome with the switch “--disable-web-security”. On Linux that would be: google-chrome --disable-web-security and on Mac (sorry, I cannot test this): open -a Google\ Chrome --args --disable-web-security. For Firefox see this: http://www-jo.se/f.pfleger/forcecors-workaround)

direct link to the XSLT:

http://www.opendimension.org/wikimedia/finna/xsl/lido2gwtoolset.xsl

Conclusion

These demos are just examples; no actual data has yet been uploaded to Wikimedia Commons. The aim is to show that the XML conversions needed for GWToolset are relatively simple and that an organisation does not need an army of IT engineers in order to use GWToolset.

The demos could certainly be better. For example, the author name must be changed to match the author name used in Wikimedia Commons. But again, that takes just a few lines of XSLT.